When it comes to language models, few-shot performance is crucial. Getting a model to predict and generate text accurately from only a handful of examples is a challenge that researchers and developers have been working to overcome. In this article, we explore the ideas behind the paper “Calibrate Before Use: Improving Few-Shot Performance of Language Models” and how calibrating a model can significantly enhance its few-shot performance.


The Role of Calibration

Calibration plays a vital role in making language models reliable. A model is well calibrated when its predicted probabilities match how often it is actually right: among all predictions it makes with 80% confidence, roughly 80% should be correct. Calibrating a language model means adjusting its output probabilities (rather than retraining the model) so that they better reflect the true likelihood of each outcome in the target domain, which can improve its performance in few-shot learning scenarios.

Why Calibrate Language Models?

- Improved Accuracy: When a model’s raw predictions are systematically skewed toward certain outputs, calibration can correct that skew and substantially improve accuracy, including on rare or unseen events. Aligning the model’s confidence scores with the actual probabilities also reduces the chances of unrealistic or overconfident predictions.

- Confidence Estimation: Calibrated language models provide reliable confidence estimates for their predictions. This is especially valuable in real-world applications where making informed decisions based on the model’s output is crucial.

- Generalization: Calibration can help a language model behave more reliably on new inputs. A calibrated model is more likely to generate sensible and reliable responses in few-shot learning scenarios, where only a small number of examples may be available.

How to Calibrate Language Models?

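- Temperature Scaling: One common technique for calibrating language models is temperature scaling. This method divides the model’s logits by a single temperature parameter T before applying the softmax function. A temperature above 1 flattens the output distribution (lower confidence), and a temperature below 1 sharpens it (higher confidence); T is usually chosen to minimize negative log-likelihood on a held-out dataset. Because every logit is divided by the same positive value, temperature scaling changes the model’s confidence but not which output it ranks highest.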

- Platt Scaling: Platt scaling is another popular calibration method that trains a small, separate calibration model on top of the language model. In its standard binary form, this is a logistic regression that maps the language model’s score s to a calibrated probability sigmoid(a * s + b). By fitting the two parameters a and b on a held-out dataset rather than on the training data, we can obtain well-calibrated predictions.

- Ensemble Methods: Ensembling multiple language models and combining their predictions can also help improve calibration. By averaging the predicted probabilities of different models, we can reduce the effects of each model’s individual biases and improve the overall calibration.
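The three techniques above can be sketched in a few dozen lines of dependency-free Python. This is a hedged illustration under simplifying assumptions, not the specific procedure from the “Calibrate Before Use” paper or any library’s API. The helper names are hypothetical. The temperature is picked by a coarse grid search over held-out negative log-likelihood. Platt scaling is shown in its binary form and fitted with plain gradient descent (Platt’s original procedure uses a more careful optimizer). The ensemble simply averages probability vectors.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical helper names for illustration; not taken from the paper or any library.


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Softmax of the logits after dividing them by `temperature`."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max so math.exp cannot overflow
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def mean_nll(logits_list: Sequence[Sequence[float]], labels: Sequence[int],
             temperature: float) -> float:
    """Average negative log-likelihood of the true labels at a given temperature."""
    return -sum(math.log(softmax(z, temperature)[y])
                for z, y in zip(logits_list, labels)) / len(labels)


def fit_temperature(logits_list: Sequence[Sequence[float]],
                    labels: Sequence[int]) -> float:
    """Temperature scaling: grid-search T in [0.25, 5.0] to minimise held-out NLL."""
    grid = [0.25 + 0.05 * i for i in range(96)]  # assumed search range and step
    return min(grid, key=lambda t: mean_nll(logits_list, labels, t))


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def fit_platt(scores: Sequence[float], labels: Sequence[int],
              lr: float = 0.1, steps: int = 2000) -> Tuple[float, float]:
    """Binary Platt scaling: fit p(y=1 | s) = sigmoid(a*s + b) by gradient descent on log loss."""
    a, b = 1.0, 0.0
    n = len(scores)
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for s, y in zip(scores, labels):
            err = sigmoid(a * s + b) - y  # derivative of log loss w.r.t. a*s + b
            grad_a += err * s
            grad_b += err
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b


def ensemble_average(prob_lists: Sequence[Sequence[float]]) -> List[float]:
    """Average the probability vectors that several models assign to the same input."""
    k = len(prob_lists)
    return [sum(p[i] for p in prob_lists) / k for i in range(len(prob_lists[0]))]


if __name__ == "__main__":
    # Toy held-out set: the model always puts logit 4 on class 0 but is right only 3 times in 4.
    held_out_logits = [[4.0, 0.0]] * 4
    held_out_labels = [0, 0, 0, 1]
    t = fit_temperature(held_out_logits, held_out_labels)
    print(f"T = {t:.2f}")
    print("before:", softmax([4.0, 0.0]))
    print("after: ", softmax([4.0, 0.0], t))
```

On this toy held-out set, the fitted temperature should come out close to the exact optimum T = 4 / ln 3 ≈ 3.64. That lowers the class-0 probability from about 0.98 to about 0.75, matching the model’s 3-in-4 hit rate, while the predicted class stays the same.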

Evaluating Calibration

To check whether a language model is properly calibrated, several evaluation tools can be used:

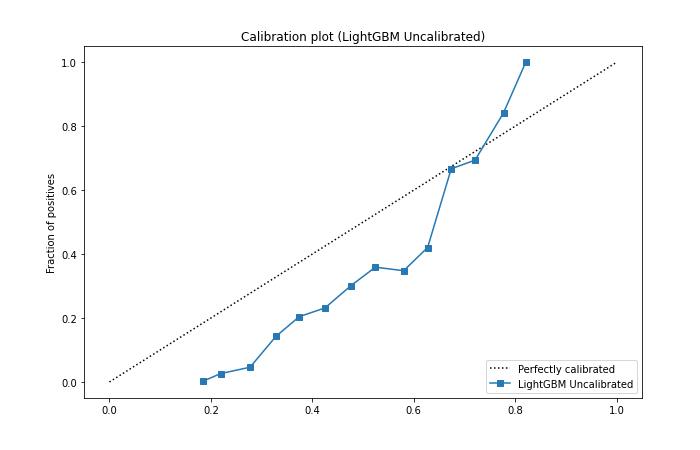

- Reliability Diagram: A reliability diagram groups predictions into bins by confidence and plots each bin’s average confidence against its observed accuracy. For a well-calibrated model, the points lie close to the diagonal line, meaning its confidence matches how often it is actually correct; points below the diagonal indicate overconfidence.

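- Expected Calibration Error: Expected calibration error (ECE) summarizes a reliability diagram in a single number. It is the average gap between accuracy and confidence across the confidence bins, weighted by the fraction of predictions in each bin: ECE = sum over bins m of (|B_m| / n) * |acc(B_m) - conf(B_m)|, where B_m is the set of predictions in bin m and n is the total number of predictions. A lower ECE value indicates better calibration.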

- Brier Score: The Brier score is a proper scoring rule that measures the mean squared difference between the predicted probabilities and the actual outcomes (encoded as 1 for the outcome that occurred and 0 otherwise). Lower is better: it rewards predictions that are both accurate and well calibrated and penalizes overconfident or underconfident ones.
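These metrics are straightforward to compute once you have, for each prediction, the model’s confidence in its predicted label and whether that label was correct. Below is a minimal, dependency-free sketch. The function names and the choice of ten equal-width bins are illustrative assumptions (other binning schemes exist), and the Brier score uses the multi-class form that sums squared errors over classes, so its range is 0 to 2 rather than the 0 to 1 of the common binary form.

```python
from typing import List, Sequence, Tuple

# Hypothetical helper names for illustration; ten equal-width bins is an assumed default.


def reliability_bins(confidences: Sequence[float], correct: Sequence[bool],
                     n_bins: int = 10) -> List[Tuple[float, float, int]]:
    """Split predictions into equal-width confidence bins.

    Returns (average confidence, accuracy, count) for each non-empty bin;
    these are the points a reliability diagram plots.
    """
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for c, ok in zip(confidences, correct):
        idx = min(int(c * n_bins), n_bins - 1)  # confidence 1.0 falls in the last bin
        bins[idx].append((c, ok))
    points = []
    for b in bins:
        if b:
            avg_conf = sum(c for c, _ in b) / len(b)
            accuracy = sum(1 for _, ok in b if ok) / len(b)
            points.append((avg_conf, accuracy, len(b)))
    return points


def expected_calibration_error(confidences: Sequence[float], correct: Sequence[bool],
                               n_bins: int = 10) -> float:
    """ECE: bin-size-weighted average of |accuracy - average confidence|."""
    n = len(confidences)
    return sum(count / n * abs(acc - conf)
               for conf, acc, count in reliability_bins(confidences, correct, n_bins))


def brier_score(probs: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Multi-class Brier score: squared error against one-hot labels, summed over
    classes and averaged over examples (0 is perfect, lower is better)."""
    total = 0.0
    for p, y in zip(probs, labels):
        total += sum((p_k - (1.0 if k == y else 0.0)) ** 2 for k, p_k in enumerate(p))
    return total / len(labels)


if __name__ == "__main__":
    # Toy example: ten predictions at 90% confidence, only half of them correct.
    confidences = [0.9] * 10
    correct = [True] * 5 + [False] * 5
    print(reliability_bins(confidences, correct))  # one bin, well below the diagonal
    print(expected_calibration_error(confidences, correct))  # about 0.4
```

In this toy case the single bin sits well below the diagonal, and the ECE of about 0.4 matches the gap between 90% confidence and 50% accuracy.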

Conclusion

Calibrating language models before use can substantially improve their few-shot performance. By aligning a model’s predicted probabilities with how often it is actually correct, we can get more accurate and reliable results even when only a few examples are available. Techniques like temperature scaling, Platt scaling, and ensembling help improve calibration, and reliability diagrams, ECE, and the Brier score show how well they worked. So, the next time you work with a language model, don’t forget to calibrate before use to enhance its performance and increase its trustworthiness.